ImprovAIze

Published:

An AAU AI Bridging project that combines machine learning and wearable sensing devices to develop intuitive audiovisual displays that accurately reflect physical activity and the felt experience of human movement.  Read more

Published:

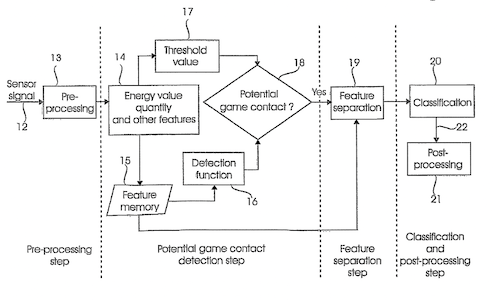

IPR on Bluetooth LTE sensor and ML-based software backend  Read more

Published:

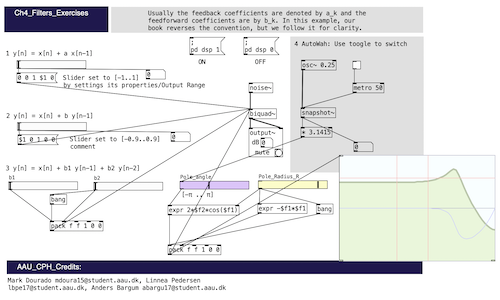

Study material for Audio Processing on Pure Data Read more

Published:

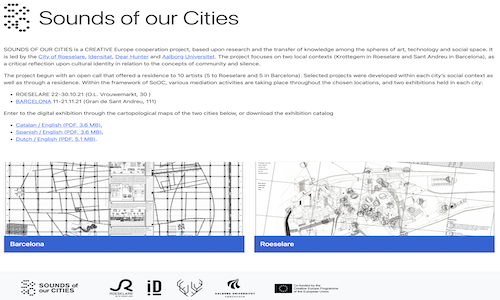

AR-enabled, rich multimedia digital interface to SooC project, supported by Creative Europe  Read more

Published in 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Valletta, Malta, 2020

Movement generation driven by real-time MoCap sensor data Read more

Recommended citation: Esbern Torgard Kaspersen, David Gzórny, Cumhur Erkut, and George Palamas (2020). Proc. Intl. Joint Conf. Computer Vision, Imaging and Computer Graphics Theory and Applications - Volume 1, 319–326. http://doi.org/10.5220/0008990403190326

Published in J. New Music Research, 2020

Highlighted the importance of movement-based sonic interaction design and interactive machine learning in VR Read more

Recommended citation: Stefania Serafin, Cumhur Erkut, Amalia De Goetzen, Niels Christian Nilsson, Rolf Nordahl, Francesco Grani, Federico Avanzini, and Michele Geronazzo. Reflections from five years of Sonic Interaction in Virtual Environments (SIVE) workshops. 2020 J. New Music Research, 49 (1), pp 24-34 https://doi.org/10.1080/09298215.2019.1708413

Published in Proc. Sound and Music Computing Conf. (online), 2021

Enhances DDSP with a latent-space representation for singing voice language comprehension. Read more

Recommended citation: Juan Alonso and Cumhur Erkut, 2021. Explorations of Singing Voice Synthesis Using DDSP. In Proc. Sound and Music Computing Conf., p. 183-190, doi:10.5281/zenodo.5043850 https://zenodo.org/record/5043851

Published in Proc. Sound and Music Computing Conf. (France), 2022

Deep-learning real-time feedback delay network reverb as a VST3 using JUCE with CI/CD … Read more

Recommended citation: Søren V K Lyster and Cumhur Erkut, 2022. A Differentiable Neural Network Approach To Parameter Estimation Of Reverberation. In Proc. Sound and Music Computing Conf., p. 354-360, doi:10.5281/zenodo.65733571 https://doi.org/10.5281/zenodo.65733571

Published in IEEE/ACM Transactions on Audio, Speech and Language Processing, 31(99), 256–264, 2022

Pruning most of the deep learning model parameters may improve the sound quality … Read more

Recommended citation: Südholt, David, Alec Wright, Cumhur Erkut, and Vesa Välimäki. 2022. “Pruning Deep Neural Network Models of Guitar Distortion Effects.” IEEE/ACM Transactions on Audio, Speech, and Language Processing 31: 256–64. https://doi.org/10.1109/taslp.2022.3223257

Published in Proc DAFx 2023, Copenhagen, Denmark, 2023

Learnable all-pass filters optimized via an overparameterized BiasNet network without input audio. … Read more

Recommended citation: Anders Bargum, Stefania Serafin, Cumhur Erkut, and Julian D Parker. 2023. “Differentiable All-pass Filters for Phase Response Estimation and Automatic Signal Alignment.” in Proc DAFx 2023, Copenhagen, Denmark https://arxiv.org/abs/2306.00860

Published in Frontiers in Signal Processing (in review), 2024

Based on a scoping review of 100+ papers, we outline the best practices of DL-VC prior to transformers. Read more

Recommended citation: Bargum, Anders R, Stefania Serafin, and Cumhur Erkut. 2023. “Reimagining Speech: a Scoping Review of Deep Learning-Powered Voice Conversion.” CoRR. doi:10.48550/arxiv.2311.08104. In Review: Frontiers Signal Processing https://arxiv.org/abs/2311.08104

Published in Proc MOCO 2024, Utrecht, the Netherlands, 2024

Accepted: a soma-design exercise towards active inference of movement and sound … Read more

Recommended citation: Pelin Kiliboz and Cumhur Erkut, 2024. “Multimodal Looper: A Live-Looping System for Gestural and Audio-visual Improvisation” in Proc MOCO 2024, Utrecht, The Netherlands

Published:

Starting from differentiable IIR filters, I extrapolate the current state of the art in neural audio synthesis towards a research agenda on Differentiable Sound and Music Computing. Read more

Published:

Recent work at the Multisensory Experience Lab pertaining to the topic, with physics-based audio and movement models beyond deep learning. Hints at what’s to come: graphical and physics-based deep learning. Click to learn more about our viewpoint and to watch the talk. Read more

Published:

Impact of Differentiable Digital Signal Processing (DDSP) on the recent work at the Multisensory Experience Lab. Hints: We can deploy DDSP-based real-time plugins fast because … Read more

Published:

Impact of MLOps on the recent work at Aalborg University and the Multisensory Experience Lab. I emphasized that there were no foundation models for audio yet (but that we might expect them soon), and in January 2023 they started to pour in, in batches! Read more

Published:

In 2024, on many occasions, I pitched for an Edge Intelligence infrastructure that can connect our labs and people’s homes. Read more

Teaching Administration, Aalborg University, 2022

I joined the AAU CREATE Media Technology Study Board, effective 2022-02-01. Read more

BSc, Aalborg University, 2023

I taught Audio Processing: processering af lydsignaler in MED4: Interactive Sound Systems of the Medialogy BSc, Copenhagen, from 2016 to 2022, and handed it over to the talented Razvan Paisa. Read more

Supervision, Aalborg University, 2024

This is a container for semester project ideas for the SMC students. Read more

Supervision, Aalborg University, 2024

This is a container for semester project ideas for the MED students. Read more

MSc, Aalborg University, 2024

I am super excited for the new edition of Machine Learning for Media Experiences, with the TA Mubarik Jamal Muuse. Read more